Data engineers build data pipelines that transform raw, unstructured data into formats data scientists can use for analysis. They are responsible for creating and maintaining the analytics infrastructure that enables almost every other data function. This includes architectures such as databases, servers, and large-scale processing systems.

Learn about the salary and job description of a data engineer, plus key data engineer skills, roles, and responsibilities in this online guide.

What Is a Data Engineer?

Data engineers prepare and transform data using pipelines. This involves extracting data from various source systems, transforming it in a staging area, and loading it into a data warehouse. This process is known as ETL (Extract, Transform, Load).
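
The three ETL steps can be sketched in a few lines of Python. This is a minimal illustration rather than a production pipeline: the sample CSV text, the `orders` table, and the function names are all hypothetical, and an in-memory SQLite database stands in for a real data warehouse.

```python
import csv
import io
import sqlite3

# Hypothetical raw export from a source system (stands in for a real file or API).
RAW_CSV = """order_id,customer,amount
1, Alice ,19.99
2,bob,5.00
3,,12.50
"""


def extract(raw_text):
    """Extract: read rows from the source as dictionaries."""
    return list(csv.DictReader(io.StringIO(raw_text)))


def transform(rows):
    """Transform: tidy customer names, cast types, drop rows with no customer."""
    staged = []
    for row in rows:
        customer = row["customer"].strip().title()
        if not customer:
            continue  # skip incomplete records
        staged.append((int(row["order_id"]), customer, float(row["amount"])))
    return staged


def load(conn, rows):
    """Load: write the cleaned rows into a warehouse table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(order_id INTEGER PRIMARY KEY, customer TEXT, amount REAL)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", rows)
    conn.commit()


def run_etl(raw_text=RAW_CSV):
    """Run extract, transform, and load against an in-memory database."""
    conn = sqlite3.connect(":memory:")  # assumption: SQLite as a stand-in warehouse
    load(conn, transform(extract(raw_text)))
    return conn


if __name__ == "__main__":
    warehouse = run_etl()
    print(warehouse.execute("SELECT * FROM orders").fetchall())
```

Keeping each step in its own function mirrors how real pipelines are usually organized, since each stage can then be tested or replaced independently.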

What Does a Data Engineer Do?

A data engineer’s job is to organize the collection, processing, and storage of data from different sources. To do this, data engineers need an in-depth knowledge of SQL and of other database solutions such as Cassandra and Bigtable.

Learn more about how to become a data engineer here.

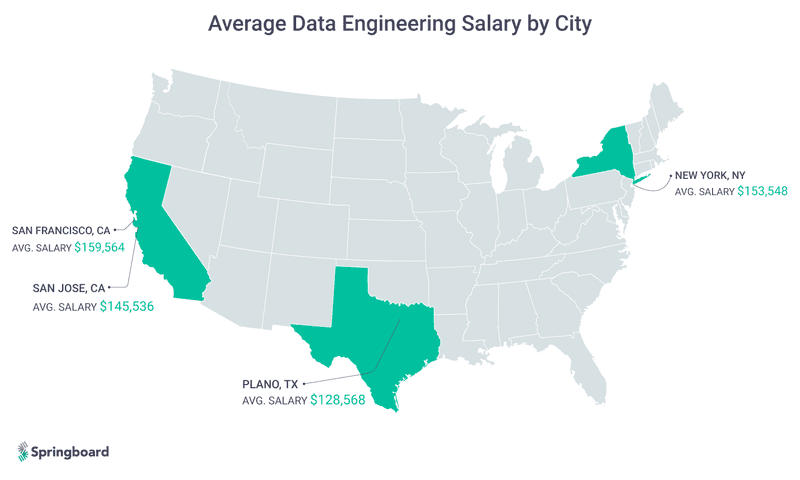

What Is the Average Salary of a Data Engineer?

Data engineers earn an average salary of $127,983, according to Indeed. Top companies for data engineers include Netflix, Facebook, Target, and Capital One. An entry-level data engineer with less than one year of experience can expect to earn $77,361, including tips, bonus, and overtime pay, according to Payscale.

The five highest-paying cities for data engineers are as follows:

- San Francisco: $159,564

- New York: $153,548

- San Jose: $145,536

- Los Angeles: $132,076

- Plano: $128,568

What Is a Typical Data Engineering Job Description?

Data engineers are typically responsible for finding and analyzing patterns in datasets. This requires transforming large amounts of data into formats that can be processed and analyzed. The role of a data engineer requires significant technical skills, including multiple programming languages and knowledge of SQL and AWS technologies.

Related: Data Engineer vs. Data Analyst: Salary, Skills, & Background

The level of skills and the foundational knowledge required varies widely from junior data engineering job descriptions to senior data engineering job descriptions.

The job description of a data engineer usually contains clues on what programming languages a data engineer needs to know, the company’s preferred data storage solutions, and some context on the teams the data engineer will work with.

Potential data engineering candidates will be expected to:

- Create and maintain optimal data pipeline architecture

- Assemble large, complex data sets that meet business requirements

- Identify, design, and implement internal process improvements

- Optimize data delivery and re-design infrastructure for greater scalability

- Build the infrastructure required for optimal extraction, transformation, and loading of data from a wide variety of data sources using SQL and AWS technologies

- Build analytics tools that utilize the data pipeline to provide actionable insights into customer acquisition, operational efficiency, and other key business performance metrics

- Work with internal and external stakeholders to assist with data-related technical issues and support data infrastructure needs

- Create data tools for analytics and data scientist team members

What Are the Key Skills of a Data Engineer?

Data engineers need to be literate in the programming languages used for statistical modeling and analysis, familiar with data warehousing solutions, and able to build data pipelines. They also need a strong foundation in software engineering.

While data engineer job specs vary across industries, most hiring managers focus on:

- SQL and NoSQL database systems

- Data warehousing solutions

- ETL tools

- Machine learning

- Data APIs

- Python, Java, and Scala programming languages

- Understanding the basics of distributed systems

- Knowledge of algorithms and data structures

Since data engineers can come from different educational backgrounds, soft skills are also important to many employers. The following skills are useful to have when competing for a data engineering role:

- Communication skills

- Collaboration skills

- Presentation skills

Learn more about the types of essential skills required to become a data engineer here.

3 Common Data Engineer Job Roles

Data engineer job profiles vary widely between companies. The scope of these roles depends largely on the size of the company, the maturity of its data operations, and the volume of data collected.

- Small companies: A data engineer on a small team may be responsible for every step of data flow, from configuring data sources to managing analytical tools. In other words, they would architect, build, and manage databases, data pipelines, and data warehouses, essentially doing the work of a full-stack data scientist.

- Mid-size companies: In a mid-sized company, data engineers work side by side with data scientists to build whatever custom tools they need to accomplish certain big data analytics goals. They oversee data integration tools that connect data sources to a data warehouse. These pipelines either simply transfer information from one place to another or carry out more specific tasks.

- Large companies: In a large enterprise with highly complex data needs, a typical data engineer job spec requires data engineers to focus on setting up and populating analytics databases, tuning them for fast analysis, and creating table schemas. This involves ETL (Extract, Transform, Load) work, which refers to how data is taken (extracted) from a source, converted (transformed) into a format that can be analyzed, and stored (loaded) in a data warehouse.

Learn more about key data engineering roles here.

What Are the Key Responsibilities of a Data Engineer?

Data engineers are responsible for building and maintaining an organization’s data infrastructure, including databases, data warehouses, and data pipelines. A typical data engineer profile requires the transformation of data into a format that is useful for analysis.

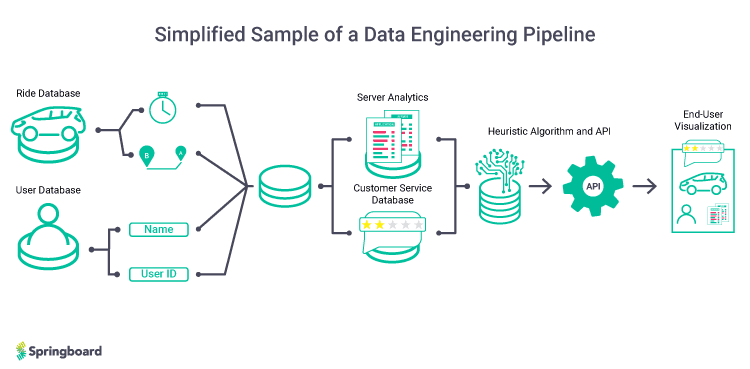

This starts with cleaning, organizing, and processing raw, unstructured data. Data pipelines are the systems designed to process and store that data. They capture, cleanse, transform, and route data to destination systems. For example, a pipeline might take raw data from a SaaS platform such as a CRM system or email marketing tool and store it in a data warehouse, where it can be analyzed using analytics and business intelligence tools.
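
The capture, cleanse, transform, and route stages can be sketched as a chain of small Python functions. This is an illustrative sketch only: the sample events, field names, and destination names are hypothetical, and a plain dictionary stands in for the destination systems.

```python
from collections import defaultdict

# Hypothetical events captured from a SaaS tool such as a CRM.
RAW_EVENTS = [
    {"type": "contact", "email": " Ada@Example.com "},
    {"type": "email_open", "email": "ada@example.com"},
    {"type": "contact", "email": None},
]


def cleanse(events):
    """Drop events with no email and normalize the rest."""
    for event in events:
        if event.get("email"):
            yield {**event, "email": event["email"].strip().lower()}


def transform(events):
    """Add a derived field that analysts might want to filter on."""
    for event in events:
        yield {**event, "domain": event["email"].split("@")[1]}


def route(events):
    """Group events by type, as if sending each to its own destination table."""
    destinations = defaultdict(list)
    for event in events:
        destinations[event["type"]].append(event)
    return dict(destinations)


def run_pipeline(raw_events=RAW_EVENTS):
    """Chain the stages: captured events are cleansed, transformed, then routed."""
    return route(transform(cleanse(raw_events)))


if __name__ == "__main__":
    for destination, rows in run_pipeline().items():
        print(destination, rows)
```

Using generators for the middle stages means records flow through one at a time, which is one common way to keep memory use low when the input is large.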

Case Study: A Data Engineer’s Role Inside Uber

To better understand the work of a data engineer, consider the back-end database structure for a mobile app service like Uber. A data engineer is responsible for building and maintaining this system in its entirety, which consists of:

- The database for the main app containing user and driver information

- Ride database (information about a single ride as well as status information)

- Server analytics logs, which contain access logs and error logs of server requests made in the app

- App analytics logs (event logs containing records of what actions users and drivers took in the app)

- Customer service database (information about customer interactions with customer service agents)
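
To make the list above concrete, here is one way those stores might be modeled as tables. This is a hypothetical sketch for illustration, not Uber's actual schema: every table and column name is an assumption, and in practice the logs and the transactional data would likely live in separate systems rather than a single SQLite database.

```python
import sqlite3

# Hypothetical schema: one table per data store described in the case study.
SCHEMA = """
CREATE TABLE users (user_id INTEGER PRIMARY KEY, name TEXT NOT NULL);
CREATE TABLE drivers (driver_id INTEGER PRIMARY KEY, name TEXT NOT NULL);
CREATE TABLE rides (
    ride_id INTEGER PRIMARY KEY,
    user_id INTEGER NOT NULL REFERENCES users(user_id),
    driver_id INTEGER NOT NULL REFERENCES drivers(driver_id),
    status TEXT NOT NULL
);
CREATE TABLE server_logs (log_id INTEGER PRIMARY KEY, level TEXT, message TEXT);
CREATE TABLE app_events (event_id INTEGER PRIMARY KEY, user_id INTEGER, action TEXT);
CREATE TABLE support_tickets (
    ticket_id INTEGER PRIMARY KEY,
    ride_id INTEGER REFERENCES rides(ride_id),
    notes TEXT
);
"""


def build_database():
    """Create all tables in an in-memory SQLite database."""
    conn = sqlite3.connect(":memory:")
    conn.executescript(SCHEMA)
    return conn


def rides_with_tickets(conn):
    """Join rides to customer service tickets, a typical cross-database question."""
    return conn.execute(
        "SELECT r.ride_id, r.status, t.notes "
        "FROM rides r JOIN support_tickets t ON t.ride_id = r.ride_id "
        "ORDER BY r.ride_id"
    ).fetchall()


if __name__ == "__main__":
    db = build_database()
    db.execute("INSERT INTO users VALUES (1, 'Rider')")
    db.execute("INSERT INTO drivers VALUES (1, 'Driver')")
    db.execute("INSERT INTO rides VALUES (10, 1, 1, 'completed')")
    db.execute("INSERT INTO support_tickets VALUES (100, 10, 'fare dispute')")
    print(rides_with_tickets(db))
```

Queries like `rides_with_tickets` are the kind of cross-source question a data engineer makes possible by bringing these stores together.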
