You might be a machine learning project first-timer, a hardened AI veteran, or even a tenured professor researching state-of-the-art artificial intelligence—at any skill level, you are likely knowledgeable about PyTorch or TensorFlow.

But beyond what you already know, what are the key comparisons between PyTorch and TensorFlow? Which one is better for your project’s needs? We’ll explain the strengths of each and the best opportunities to apply them.


*Looking for the Colab Notebook for this post? Find it right here.*

What is TensorFlow? What is PyTorch?

PyTorch and TensorFlow are two of the biggest names in machine learning frameworks. They are tools that help you quickly design, evaluate, and deploy neural networks at competitive performance levels. PyTorch was originally developed by Facebook’s AI Research (FAIR) group, now part of Meta, while TensorFlow is overseen by Google AI.

It’s not surprising that both PyTorch and TensorFlow are popular among data scientists and ML engineers, given that they were developed by two of the biggest names on the Internet and in machine learning research. But their popularity extends beyond the names of their parent companies. PyTorch and TensorFlow are among the most advanced machine learning tools in the industry and are built on many of the same ideas.

Both PyTorch and TensorFlow are open source, which helps with long-term support: anyone with a GitHub account can propose contributions to either project, so recent research often becomes available quickly on each platform.

Both PyTorch and TensorFlow have remained among the fastest frameworks by running as many of their computations as possible on accelerated hardware such as GPUs (processors that can perform many operations in parallel). On NVIDIA GPUs, both rely on the same library, cuDNN, NVIDIA’s GPU-accelerated library of deep learning primitives.

Under the hood, PyTorch and TensorFlow also use a similar concept, dubbed data flow graphs, to translate the code that you write into hardware-accelerated operations. A data flow graph describes the mathematical operations necessary to compute a neural net’s outputs. The framework can also traverse the graph in reverse, applying the chain rule at each operation (a process known as reverse-mode automatic differentiation), to carry out the famous backpropagation step without requiring the developer to specify how gradients are computed.
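To make the idea concrete, here is a minimal sketch of a data flow graph with reverse-mode automatic differentiation, written in plain Python. It is a toy, not how either framework is implemented: the names `Node`, `add`, `mul`, and `backward` are hypothetical and chosen only for illustration.

```python
# Toy reverse-mode automatic differentiation over a data flow graph.
# Illustrative only: Node, add, mul, and backward are hypothetical names,
# not part of PyTorch or TensorFlow.


class Node:
    """One value in the graph, plus links back to the values it was built from."""

    def __init__(self, value, parents=()):
        self.value = value
        self.grad = 0.0
        # Each entry is (parent_node, local derivative of this node w.r.t. parent).
        self.parents = parents


def add(a, b):
    # d(a + b)/da = 1 and d(a + b)/db = 1
    return Node(a.value + b.value, parents=((a, 1.0), (b, 1.0)))


def mul(a, b):
    # d(a * b)/da = b and d(a * b)/db = a
    return Node(a.value * b.value, parents=((a, b.value), (b, a.value)))


def backward(output):
    """Walk the graph from the output back to the inputs, applying the chain rule."""
    order, seen = [], set()

    def visit(node):
        # Depth-first post-order: every node is listed after all of its parents.
        if id(node) in seen:
            return
        seen.add(id(node))
        for parent, _ in node.parents:
            visit(parent)
        order.append(node)

    visit(output)
    output.grad = 1.0
    # Reversed order guarantees a node's gradient is complete before it is passed on.
    for node in reversed(order):
        for parent, local_grad in node.parents:
            parent.grad += node.grad * local_grad


# y = w * x + b, a single "neuron" without an activation function.
w, x, b = Node(3.0), Node(2.0), Node(1.0)
y = add(mul(w, x), b)
backward(y)

print(y.value, w.grad, x.grad, b.grad)
```

Notice that nothing in the example spells out the gradient of `y` by hand; each operation only records its own local derivative, and `backward` combines them. In the real frameworks, the same job is done by calling `backward()` on a PyTorch tensor or by recording operations with TensorFlow’s `tf.GradientTape`.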

TensorFlow vs. PyTorch: What’s the difference?

You’ve seen now that PyTorch and TensorFlow share many of the same elements, but each has unique application opportunities.

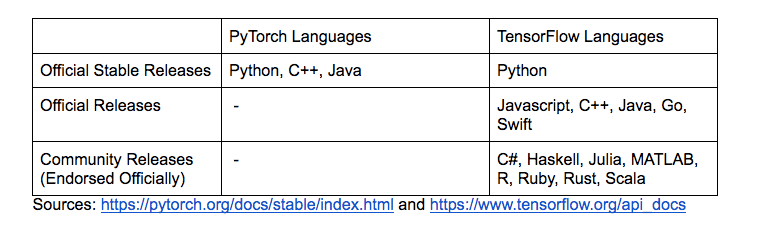

For one, TensorFlow has benefited from open-source contributions somewhat differently: community members have actively developed TensorFlow APIs in many languages beyond those TensorFlow officially supports, and TensorFlow has been quick to embrace this development.

PyTorch offers less official support for third-party adaptations and instead focuses on keeping its own non-Python language support stable. The following table summarizes this difference.

Historically, PyTorch and TensorFlow generated their data flow graphs differently. Before version 2.0, TensorFlow compiled its data flow graph before running any computations on it, an approach known as a static graph. PyTorch, on the other hand, built its data flow graph while the code was executing, an approach known as a dynamic graph. Nowadays, both PyTorch and TensorFlow can use static or dynamic graphs, but their origins still give each a slight advantage in its original mode.

Static graphs allow the framework to apply every hardware optimization it can before the code runs, at the cost of some flexibility. For instance, if you need to alter the neural network architecture at runtime, as in network pruning to shrink a model’s size, then the framework must recompile the graph, whereas a dynamic graph simply carries on. Think of it as TensorFlow making a pit stop mid-race. Since TensorFlow started out with static graphs, its static graphs are slightly more optimized than PyTorch’s.
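The trade-off between the two graph styles can be sketched in a few lines of plain Python. This is a toy under stated assumptions: `scale`, `compile_static`, and `run_dynamic` are hypothetical helpers invented for this example, and the "compile" step only freezes the layer list without performing any of the hardware optimizations a real framework would apply.

```python
# Toy contrast between static and dynamic graphs in plain Python.
# scale, compile_static, and run_dynamic are hypothetical names for illustration;
# neither framework exposes functions with these names.


def scale(factor):
    """A stand-in 'layer' that multiplies its input by a constant."""
    return lambda x: x * factor


def compile_static(layers):
    """Freeze the layer list into one function before any data is seen."""
    frozen = tuple(layers)  # snapshot: later edits to `layers` are ignored

    def compiled(x):
        for layer in frozen:
            x = layer(x)
        return x

    return compiled


def run_dynamic(layers, x):
    """Rebuild the computation on every call from the current layer list."""
    for layer in layers:
        x = layer(x)
    return x


layers = [scale(2.0), scale(3.0), scale(5.0)]
static_model = compile_static(layers)

before_static = static_model(1.0)          # uses all three layers
before_dynamic = run_dynamic(layers, 1.0)  # uses all three layers

# "Prune" the last layer at runtime.
layers.pop()

after_dynamic = run_dynamic(layers, 1.0)  # picks up the change immediately
stale_static = static_model(1.0)          # still runs the old, unpruned graph
recompiled = compile_static(layers)(1.0)  # the "pit stop": recompile to see the change

print(before_static, before_dynamic, after_dynamic, stale_static, recompiled)
```

The dynamic version reflects the pruned architecture on the very next call, while the static version keeps running the old graph until it is compiled again. In practice, the static style is what you opt into with tools such as TensorFlow’s `tf.function` or PyTorch’s `torch.jit.script`, which do far more at compile time than this sketch does.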

PyTorch keeps its dynamic data flow graphs competitive with static graphs on GPUs by executing GPU work asynchronously. Operations are queued on the GPU while the Python code continues to run, which helps keep the GPU busy rather than waiting on the CPU. TensorFlow’s compilation step may leave the GPU underused at some points during execution, costing some speed as well.

TensorFlow’s big advantage over PyTorch lies in Google’s very own Tensor Processing Units (TPUs), specially designed chips that can be considerably faster than GPUs for many neural network computations. If you can use a TPU, available through Google Cloud, TensorFlow is likely to have the edge over the same PyTorch computation, since PyTorch does not support TPUs natively and instead relies on the separate PyTorch/XLA package.

Finally, practitioners use TensorFlow and PyTorch in different ways. Many industry professionals prefer TensorFlow for its TPU optimizations, wide variety of supported languages, and battle-tested robustness. Many researchers instead opt for PyTorch because it makes experimental network architectures easier to develop.

PyTorch vs. TensorFlow: Which is better?

To choose between PyTorch and TensorFlow, consider your needs and experience.

If you care only about the speed of the final model and are willing to use TPUs, then TensorFlow will run as fast as you could hope for. If you care about speed but are using a GPU, then TensorFlow and PyTorch perform similarly enough that the best choice may simply be whichever one you prefer and can develop in fastest.

If you’re learning either framework for the first time, PyTorch and TensorFlow are both ubiquitous enough in data science and machine learning that learning one, if not both, will be well worth your time. It will take some work, but Springboard can help you become well-versed in either framework, or both.
