A Quick Look at Text Mining in R

This tutorial was built for people who wanted to learn the essential tasks required to process text for meaningful analysis in R, one of the most popular and open source programming languages for data science. At the end of this tutorial, you’ll have developed the skills to read in large files with text and derive meaningful insights you can share from that analysis. You’ll have learned how to do text mining in R, an essential data mining tool. The tutorial is built to be followed along with tons of tangible code examples. The full repository with all of the files and data is here if you wish to follow along.


If you don’t have an R environment set up already, the easiest way to follow along is to use Jupyter with R. Jupyter offers an interactive R environment where you can modify inputs and see the outputs immediately, so you can get up to speed on text mining in R quickly.

Text mining definition

Natural languages (English, Hindi, Mandarin, etc.) are different from programming languages. The semantics, or meaning, of a statement depends on the context, tone and many other factors. Unlike programming languages, natural languages are ambiguous.

Text mining deals with helping computers understand the “meaning” of the text. Common text mining applications include sentiment analysis (e.g., deciding whether a Tweet about a movie says something positive or not) and text classification (e.g., classifying the mails you get as spam or ham).

In this tutorial, we’ll learn about text mining and use some R libraries to implement some common text mining techniques. We’ll learn how to do sentiment analysis, how to build word clouds, and how to process your text so that you can do meaningful analysis with it.

R

R is succinctly described as “a language and environment for statistical computing and graphics,” which makes it worth knowing if you’re dabbling in the art and science of statistics and exploratory data analysis. For data scientists who are working with statistical analysis, knowing R is a must. R has a wide variety of useful packages for data science and machine learning.

Here, we’ll focus on R packages that are useful for text mining, that is, for processing text and extracting insights from it.

In this tutorial, we will be using the following packages:

- RSQLite, ‘SQLite’ Interface for R

- tm, framework for text mining applications

- SnowballC, text stemming library

- wordcloud, for making word cloud visualizations

- syuzhet, text sentiment analysis

- ggplot2, one of the best data visualization libraries

- quanteda, N-grams

You can install the aforementioned packages using the following command:

install.packages("package name")
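Since the tutorial relies on several packages, it can help to check which ones are still missing before installing anything. The snippet below is a minimal sketch using only base R; the variable names required and missing_pkgs are our own, and the install call is left commented out so that nothing is installed unless you choose to.

```r
# Packages used in this tutorial
required <- c("RSQLite", "tm", "SnowballC", "wordcloud",
              "syuzhet", "ggplot2", "quanteda")

# Compare against the packages already present in the local library
missing_pkgs <- setdiff(required, rownames(installed.packages()))

if (length(missing_pkgs) > 0) {
  message("Not yet installed: ", paste(missing_pkgs, collapse = ", "))
  # install.packages(missing_pkgs)  # uncomment to install them in one go
} else {
  message("All required packages are installed.")
}
```

install.packages() accepts a character vector, so all missing packages can be installed with a single call.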

Text preprocessing

Before we dive into analyzing text, we need to preprocess it. Text data contains white space, punctuation, stop words and so on. These elements do not convey much information and make the text harder to process. For example, English stop words like “the” and “is” tell you little about the sentiment of the text, the entities mentioned in it, or the relationships between those entities. Depending upon the task at hand, we deal with such elements differently. Removing them helps focus the analysis on the important words.


Word cloud

A word cloud is a simple yet informative way to understand textual data and to do text analysis. In this example, we will try to visualize Hillary Clinton’s emails. This will help us quantify the content of the emails, derive insights and better communicate our results. Along the way, we’ll also learn about some data preprocessing steps that will be immensely helpful in other text mining tasks as well. Let’s start with getting the data. You can head over to Kaggle to download the dataset.

Let’s read the data and learn to implement the preprocessing steps.

[code lang=”r” toolbar=”true” title=”Reading data in with R”]library(RSQLite)

db <- dbConnect(dbDriver("SQLite"), "/Users/shubham/Documents/hillary-clinton-emails/database.sqlite")

# Get all the emails sent by Hillary

emailHillary <- dbGetQuery(db, "SELECT ExtractedBodyText EmailBody FROM Emails e INNER JOIN Persons p ON e.SenderPersonId=P.Id WHERE p.Name='Hillary Clinton' AND e.ExtractedBodyText != '' ORDER BY RANDOM()")

emailRaw <- paste(emailHillary$EmailBody, collapse=" // ")

[/code]

The above code reads the “database.sqlite” file into R. SQLite is an embedded SQL database engine. Unlike most other SQL databases, SQLite does not have a separate server process. SQLite reads and writes directly to ordinary disk files. So, you can read an SQLite file much as you would read a CSV or a text file. The same idea applies to any CSV, text or other input file that you can work with in R, though the function you use to read it will differ.

This guide shows how you would read different file formats such as Excel, R and .txt files into R and other data sources (including social media data).
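To illustrate the point about other input formats, here is a minimal sketch that reads email bodies from a CSV file instead of SQLite. The file is a throwaway created on the fly, and its column names (Id, EmailBody) and contents are made up for the example; only base R is used.

```r
# Create a small temporary CSV file (made-up data, for illustration only)
csv_path <- tempfile(fileext = ".csv")
writeLines(c("Id,EmailBody",
             "1,First message",
             "2,Second message"), csv_path)

# Read it in and collapse all bodies into one string,
# mirroring what we do with the SQLite query result
emails_csv <- read.csv(csv_path, stringsAsFactors = FALSE)
email_raw_csv <- paste(emails_csv$EmailBody, collapse = " // ")

print(email_raw_csv)
```

Whatever the source, the goal is the same: end up with a character vector (or a single string) that the text mining steps can work on.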

Here, we’ll use the package RSQLite to read in a SQLite file containing all of Hillary Clinton’s emails. Next, we will be querying the column containing the Email text body. Then we’ll be ready to do an analysis of the Clinton emails that shaped this political season.

We’ll perform the following steps to make sure that the text we’re dealing with is clean:

- Convert the text to lower case, so that words like “write” and “Write” are considered the same word for analysis

- Remove numbers

- Remove English stopwords, e.g., “the”, “is”, “of”

- Remove punctuation, e.g., “,” and “?”

- Eliminate extra white spaces

- Stem the text

Stemming is the process of reducing inflected (or sometimes derived) words to their word stem, base or root form, for example changing “car”, “cars”, “car’s” and “cars’” to “car”. It also collapses different forms of the same verb, such as “digs”, “digging” and “dig”, into a single term.
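To see what these steps do to a piece of text, here is a rough base R sketch applied to one made-up sentence. The stop word list is deliberately tiny and hypothetical, and stemming is left out because it needs a dedicated stemmer.

```r
# Made-up sentence for illustration
text <- "The 2 cars' engines are running,   and the Car's owner is writing 3 emails!"

# Hypothetical, deliberately tiny stop word list
stop_words <- c("the", "is", "are", "and")

clean <- tolower(text)                    # lower case
clean <- gsub("[0-9]+", "", clean)        # remove numbers
clean <- gsub("[[:punct:]]+", "", clean)  # remove punctuation

# Split on white space, then drop empty strings and stop words
words <- strsplit(clean, "[[:space:]]+")[[1]]
words <- words[nzchar(words) & !(words %in% stop_words)]

# Re-join with single spaces, which also removes extra white space
clean <- paste(words, collapse = " ")
print(clean)
```

Note that “cars” and “car’s” both end up as “cars” here only because the apostrophe is stripped; reducing them further to “car” is the job of the stemming step.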

One very useful library to perform the aforementioned steps and text mining in R is the “tm” package. The main structure for managing documents in tm is called a Corpus, which represents a collection of text documents.

[code lang=”r” toolbar=”true” title=”Cleaning text in R”]

# Transform and clean the text

library("tm")

docs <- Corpus(VectorSource(emailRaw))[/code]

Once we have our email corpus (all of Hillary’s emails) stored in the variable “docs”, we’ll want to clean it with the techniques we discussed above, such as stopword removal and stemming. With the tm library, this can be done easily. Transformations are done via the tm_map() function, which applies a function to all elements of the corpus. Each transformation works on a single text document, and tm_map() simply applies it to every document in the corpus. For example, to convert all the text of Hillary’s emails to lowercase at once, you pass the lowercasing function to tm_map(), as shown below.

[code lang=”r” toolbar=”true” title=”Using the TM library to process text”]

# Convert the text to lower case

docs <- tm_map(docs, content_transformer(tolower))

# Remove numbers

docs <- tm_map(docs, removeNumbers)

# Remove english common stopwords

docs <- tm_map(docs, removeWords, stopwords("english"))

# Remove punctuations

docs <- tm_map(docs, removePunctuation)

# Eliminate extra white spaces

docs <- tm_map(docs, stripWhitespace)

[/code]
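Because tm_map() accepts any function wrapped in content_transformer(), we can also plug in our own cleaning steps. The sketch below is illustrative only: the two sample strings and the helper name to_space are made up, and it assumes the tm package is installed.

```r
library(tm)

# Two made-up documents standing in for email bodies
docs_demo <- VCorpus(VectorSource(c(
  "Meeting at 9am -- call me/text me",
  "Re: Re: SCHEDULE"
)))

# Hypothetical helper: replace every match of a pattern with a space
to_space <- content_transformer(function(x, pattern) gsub(pattern, " ", x))

# Extra arguments to tm_map() are passed on to the transformation
docs_demo <- tm_map(docs_demo, to_space, "/|--")
docs_demo <- tm_map(docs_demo, content_transformer(tolower))
docs_demo <- tm_map(docs_demo, stripWhitespace)

# Pull the cleaned text back out as a plain character vector
cleaned <- unname(sapply(docs_demo, as.character))
print(cleaned)
```

Replacing separators with a space before removing punctuation keeps words such as “me/text” from being glued together into “metext”.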

To stem text, we will need another library, known as SnowballC.

[code lang=”r” toolbar=”true” title=”Using the SnowballC library to stem text”]

# Text stemming (reduces words to their root form)

library("SnowballC")

docs <- tm_map(docs, stemDocument)

# Remove additional stopwords

docs <- tm_map(docs, removeWords, c("clintonemailcom", "stategov", "hrod"))

[/code]

A document-term matrix is an important representation in text analytics. Each row of the matrix is a document vector, with one column for every term in the entire corpus.

Naturally, some documents may not contain a given term, so this matrix is sparse. The value in each cell of the matrix is the term frequency.

tm makes it very easy to create this matrix. We’ll use TermDocumentMatrix(), which builds the transpose: each row is a term and each column is a document, so summing a row gives that term’s total frequency. With the matrix made, we can then proceed to build a word cloud for Hillary’s emails, highlighting which words are used most frequently.
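To make the matrix layout concrete, here is a small base R sketch that builds a document-term matrix by hand. The three documents and all variable names are made up for illustration; tm does the same job far more efficiently on a real corpus.

```r
# Three made-up, already-cleaned documents
docs_toy <- c(d1 = "budget meeting today",
              d2 = "budget call",
              d3 = "call today call")

# Tokenize and collect the vocabulary (one column per term)
tokens <- strsplit(docs_toy, " ")
names(tokens) <- names(docs_toy)
vocab <- sort(unique(unlist(tokens)))

# Count each vocabulary term in each document: rows = documents, columns = terms
dtm_toy <- t(vapply(tokens,
                    function(tok) as.integer(table(factor(tok, levels = vocab))),
                    integer(length(vocab))))
colnames(dtm_toy) <- vocab
print(dtm_toy)

# Total frequency of each term across all documents
term_freq <- sort(colSums(dtm_toy), decreasing = TRUE)
print(term_freq)
```

Most cells are zero even in this tiny example, which is the sparsity described above. Transposing dtm_toy with t() gives the term-document layout, where rowSums() plays the role that colSums() plays here.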

[code lang=”r” toolbar=”true” title=”Building the term-document matrix”]

# Rows are terms and columns are documents, so rowSums() gives each term's total frequency
dtm <- TermDocumentMatrix(docs)

m <- as.matrix(dtm)

v <- sort(rowSums(m),decreasing=TRUE)

d <- data.frame(word = names(v),freq=v)

head(d, 10)

[/code]

[code lang=”r” toolbar=”true” title=”Generating a wordcloud of Hillary’s emails”]

# Generate the WordCloud

library("wordcloud")

library("RColorBrewer")

# Open the PNG device first so that par() applies to it
png(file="WordCloud.png", width=1000, height=700, bg="grey30")

par(bg="grey30")

wordcloud(d$word, d$freq, col=terrain.colors(length(d$word), alpha=0.9), random.order=FALSE, rot.per=0.3)

title(main = "Hillary Clinton's Most Used Words in the Emails", font.main = 1, col.main = "cornsilk3", cex.main = 1.5)

dev.off()

[/code]

Sentiment Analysis

Sentiment analysis is the process of determining whether a piece of writing is positive, negative or neutral. Here, we’ll work with the package “syuzhet”.

Just as in the previous example, we’ll read the emails from the database.

[code lang=”r” toolbar=”true” title=”Read emails into syuzhet”]

Emails <- data.frame(dbGetQuery(db, "SELECT * FROM Emails"))

library('syuzhet')

[/code]

“syuzhet” uses the NRC Emotion Lexicon. The NRC Emotion Lexicon is a list of words and their associations with eight emotions (anger, fear, anticipation, trust, surprise, sadness, joy, and disgust) and two sentiments (negative and positive).

The get_nrc_sentiment function returns a data frame in which each row represents one element of the input text, which in our case is one email. The columns include one for each emotion type as well as the positive and negative sentiment valence. It allows us to take a body of text and find out which emotions it expresses, and also whether the overall sentiment is positive or negative.
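The idea behind lexicon-based scoring can be sketched in a few lines of base R. The mini-lexicon below is entirely made up and maps each word to a single emotion; the real NRC lexicon is far larger and can associate a word with several emotions, so treat this as an illustration of the principle rather than of syuzhet’s implementation.

```r
# Hypothetical mini-lexicon (not the real NRC data)
lexicon <- data.frame(
  word    = c("happy", "win", "afraid", "attack", "trust", "angry"),
  emotion = c("joy", "joy", "fear", "fear", "trust", "anger"),
  stringsAsFactors = FALSE
)
emotions <- c("anger", "fear", "joy", "trust")

# Count how many words of a text are associated with each emotion
score_text <- function(text) {
  cleaned <- tolower(gsub("[[:punct:]]", "", text))
  words <- strsplit(cleaned, "[[:space:]]+")[[1]]
  hits <- lexicon$emotion[match(words, lexicon$word)]  # NA for words not in the lexicon
  as.integer(table(factor(hits, levels = emotions)))
}

texts <- c("Happy to win, and I trust the team.",
           "Angry and afraid of another attack!")

# One row per text, one column per emotion
scores <- t(vapply(texts, score_text, integer(length(emotions)), USE.NAMES = FALSE))
colnames(scores) <- emotions
print(scores)
```

The resulting table has the same shape as the output described above: one row per input text and one column per emotion.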

[code lang=”r” toolbar=”true” title=”Do sentiment analysis of Hillary’s emails”]

d<-get_nrc_sentiment(Emails$RawText)

td<-data.frame(t(d))

# rowSums() adds up each row of the transposed data frame,
# giving one total per emotion across all emails (no hard-coded email count)
td_new <- data.frame(rowSums(td))

#Transformation and cleaning

names(td_new)[1] <- "count"

td_new <- cbind("sentiment" = rownames(td_new), td_new)

rownames(td_new) <- NULL

# Keep the eight emotions and drop the "negative" and "positive" rows
td_new2<-td_new[1:8,]

[/code]
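The transposing above can look opaque, so here is a small sketch of the same aggregation on made-up numbers. It assumes a data frame shaped like the output of get_nrc_sentiment(), with one row per email and one column per emotion; the values and the name d_toy are invented for illustration.

```r
# Made-up emotion counts for three emails
d_toy <- data.frame(
  anger    = c(0, 1, 2),
  joy      = c(3, 0, 1),
  positive = c(2, 1, 1)
)

# Summing each column gives the total per emotion across all emails
totals <- data.frame(
  sentiment = names(d_toy),
  count = unname(colSums(d_toy)),
  stringsAsFactors = FALSE
)
print(totals)
```

colSums() on the original layout gives the same totals as rowSums() on the transposed one, so either route produces the table that the bar chart needs.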

Now, we’ll use “ggplot2” to create a bar graph. Each bar represents how prominent each emotion is in the text.

[code lang=”r” toolbar=”true” title=”Graph the sentiment analysis in ggplot2″]

#Visualisation

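library("ggplot2")

# qplot() is deprecated in recent ggplot2 releases, so the same bar chart is built with ggplot()
ggplot(td_new2, aes(x = sentiment, y = count, fill = sentiment)) + geom_col() + ggtitle("Email sentiments")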

[/code]

N-grams

You must have noticed YouTube’s auto-captioning feature. Auto-captioning is a speech recognition problem. One of the components of generating captions automatically from audio is predicting which word comes after a given sequence of words. For example:

I’d like to make a …

Hopefully, you concluded that the next word in the sequence is “call”. We do this by first analyzing which words frequently co-occur. We formalize this by introducing n-grams. An n-gram is a contiguous sequence of n items from a given sequence of text or speech. In other words, we’ll be finding collocations. A collocation is a sequence of words or terms that co-occur more often than would be expected by chance. An example of this would be the term “very much.”

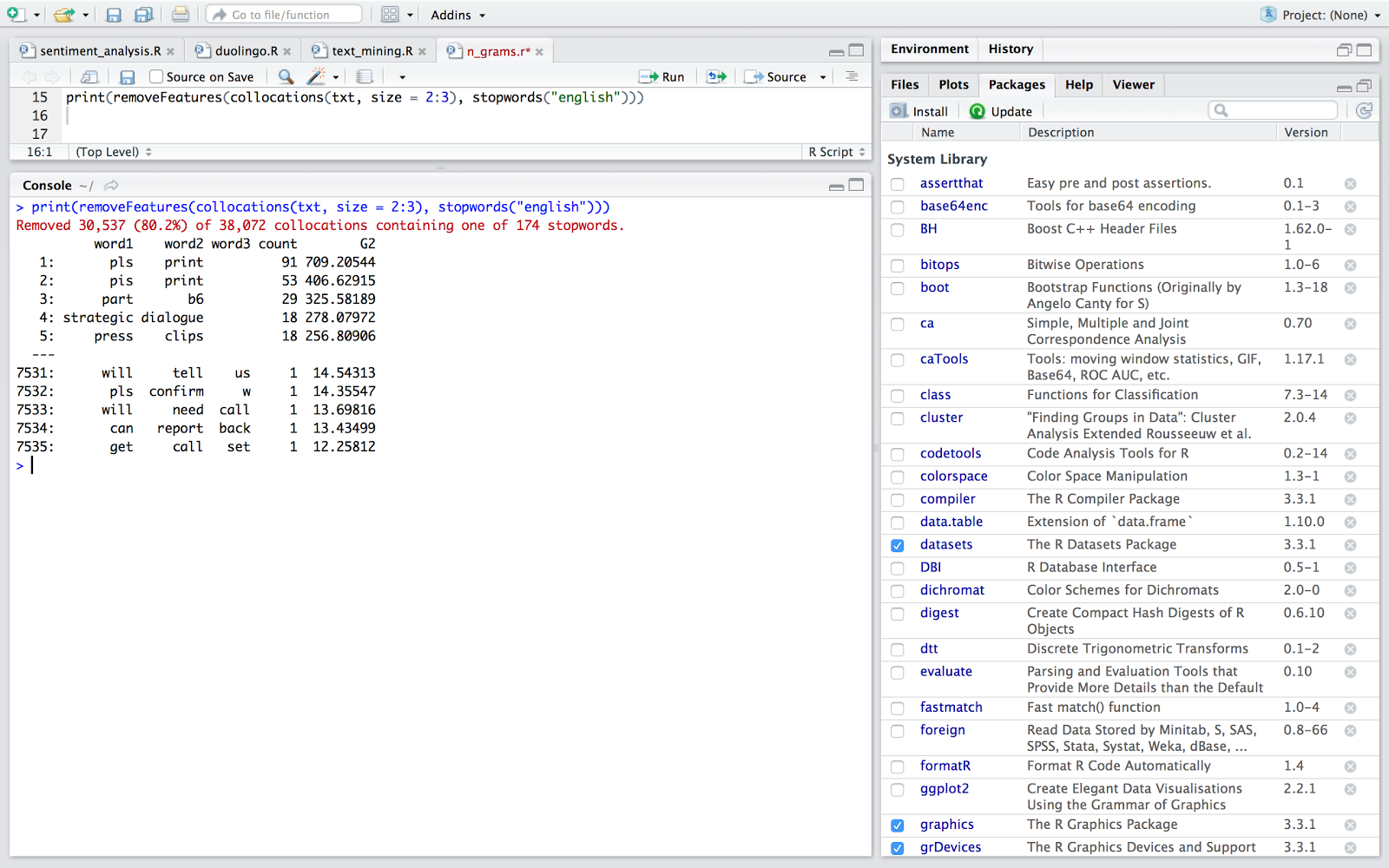
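Before turning to a library, it may help to see how n-grams can be extracted by hand. The following base R sketch counts trigrams in a made-up sentence; the function name ngrams and the sample text are our own and are not part of any package.

```r
# Build all contiguous n-word sequences from a vector of words
ngrams <- function(words, n) {
  if (length(words) < n) return(character(0))
  starts <- seq_len(length(words) - n + 1)
  vapply(starts,
         function(i) paste(words[i:(i + n - 1)], collapse = " "),
         character(1))
}

# Made-up sample text
text <- "i would like to make a call and i would like to make a plan"
words <- strsplit(text, " ")[[1]]

trigrams <- ngrams(words, 3)

# Count how often each trigram occurs, most frequent first
counts <- sort(table(trigrams), decreasing = TRUE)
print(head(counts, 5))
```

In this toy text, “make a” is followed once by “call” and once by “plan”. On a large corpus, such counts show which continuation is the most likely one.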

In this section, we’ll use the R library “quanteda” to compute trigrams and find commonly occurring sequences of three words.

[code lang=”r” toolbar=”true” title=”Calculating trigrams in quanteda”]

library(tm)

library(RSQLite)

library(quanteda)

db <- dbConnect(dbDriver("SQLite"), "/Users/shubham/Documents/hillary-clinton-emails/database.sqlite")

# Get all the emails sent by Hillary

emailHillary <- dbGetQuery(db, "SELECT ExtractedBodyText EmailBody FROM Emails e INNER JOIN Persons p ON e.SenderPersonId=P.Id WHERE p.Name='Hillary Clinton'
AND e.ExtractedBodyText != '' ORDER BY RANDOM()")

emails <- paste(emailHillary$EmailBody, collapse=" // ")

[/code]

We will use quanteda’s collocations() function to do so. Finally, we’ll remove stopwords from the collocations so we can get a clear view of the most frequently used two- and three-word sequences in Hillary’s emails.

[code lang=”r” toolbar=”true” title=”Remove stopwords”]

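# Note: collocations() and removeFeatures() belong to older quanteda releases;
# current releases provide textstat_collocations() (package quanteda.textstats) and tokens_remove() instead
collocations(emails, size = 2:3)

print(removeFeatures(collocations(emails, size = 2:3), stopwords("english")))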

[/code]

Conclusion

We set out to show you how to do some of the most common text mining tasks in R, with examples and sample code. Leave a comment below if you think we’re missing something or if you want to add something to this discussion!

Companies are no longer just collecting data. They’re seeking to use it to outpace competitors, especially with the rise of AI and advanced analytics techniques. Between organizations and these techniques are the data scientists – the experts who crunch numbers and translate them into actionable strategies. The future, it seems, belongs to those who can decipher the story hidden within the data, making the role of data scientists more important than ever.

In this article, we’ll look at 10 careers in data science, analyzing the roles and responsibilities and how best to land each job. Whether you’re more drawn to the creative side or interested in the strategic planning side of data architecture, there’s a niche for you.

Is Data Science A Good Career?

Yes. Besides being a field that comes with competitive salaries, the demand for data scientists continues to increase as they have an enormous impact on their organizations. It’s an interdisciplinary field that keeps the work varied and interesting.

10 Data Science Careers To Consider

Whether you want to change careers or land your first job in the field, here are 10 of the most lucrative data science careers to consider.

Data Scientist

Data scientists represent the foundation of the data science department. At the core of their role is the ability to analyze and interpret complex digital data, such as usage statistics, sales figures, logistics, or market research – all depending on the field they operate in.

They combine their computer science, statistics, and mathematics expertise to process and model data, then interpret the outcomes to create actionable plans for companies.

General Requirements

A data scientist’s career starts with a solid mathematical foundation, whether it’s interpreting the results of an A/B test or optimizing a marketing campaign. Data scientists should have programming expertise (primarily in Python and R) and strong data manipulation skills.

Although a university degree is not always required when candidates have on-the-job experience, data scientists benefit from data science courses and certifications that demonstrate their expertise and willingness to learn.

Average Salary

The average salary of a data scientist in the US is $156,363 per year.

Data Analyst

A data analyst explores the nitty-gritty of data to uncover patterns, trends, and insights that are not always immediately apparent. They collect, process, and perform statistical analysis on large datasets and translate numbers and data to inform business decisions.

A typical day in their life can involve using tools like Excel or SQL and more advanced reporting tools like Power BI or Tableau to create dashboards and reports or visualize data for stakeholders. With that in mind, they have a unique skill set that allows them to act as a bridge between an organization’s technical and business sides.

General Requirements

To become a data analyst, you should have basic programming skills and proficiency in several data analysis tools. A lot of data analysts turn to specialized courses or data science bootcamps to acquire these skills.

For example, Coursera offers courses like Google’s Data Analytics Professional Certificate or IBM’s Data Analyst Professional Certificate, which are well-regarded in the industry. A bachelor’s degree in fields like computer science, statistics, or economics is standard, but many data analysts also come from diverse backgrounds like business, finance, or even social sciences.

Average Salary

The average base salary of a data analyst is $76,892 per year.

Business Analyst

Business analysts often have an essential role in an organization, driving change and improvement. That’s because their main role is to understand business challenges and needs and translate them into solutions through data analysis, process improvement, or resource allocation.

A typical day as a business analyst involves conducting market analysis, assessing business processes, or developing strategies to address areas of improvement. They use a variety of tools and methodologies, like SWOT analysis, to evaluate business models and their integration with technology.

General Requirements

Business analysts often have related degrees, such as BAs in Business Administration, Computer Science, or IT. Some roles might require or favor a master’s degree, especially in more complex industries or corporate environments.

Employers also value a business analyst’s knowledge of project management principles like Agile or Scrum and the ability to think critically and make well-informed decisions.

Average Salary

A business analyst can earn an average of $84,435 per year.

Database Administrator

The role of a database administrator is multifaceted. Their responsibilities include managing an organization’s database servers and application tools.

A DBA manages, backs up, and secures the data, making sure the database is available to all the necessary users and is performing correctly. They are also responsible for setting up user accounts and regulating access to the database. DBAs need to stay updated with the latest trends in database management and seek ways to improve database performance and capacity. As such, they collaborate closely with IT and database programmers.

General Requirements

Becoming a database administrator typically requires a solid educational foundation, such as a bachelor’s degree in a data science-related field. Nonetheless, it’s not all about the degree, because real-world skills matter a lot. Aspiring database administrators should learn database languages, with SQL being the key player. They should also get hands-on experience with popular database systems like Oracle and Microsoft SQL Server.

Average Salary

Database administrators earn an average salary of $77,391 annually.

Data Engineer

Successful data engineers construct and maintain the infrastructure that allows the data to flow seamlessly. Besides understanding data ecosystems on the day-to-day, they build and oversee the pipelines that gather data from various sources so as to make data more accessible for those who need to analyze it (e.g., data analysts).

General Requirements

Data engineering is a role that demands not just technical expertise in tools like SQL, Python, and Hadoop but also a creative problem-solving approach to tackle the complex challenges of managing massive amounts of data efficiently.

Usually, employers look for credentials like university degrees or advanced data science courses and bootcamps.

Average Salary

Data engineers earn a whopping average salary of $125,180 per year.

Database Architect

A database architect’s main responsibility involves designing the entire blueprint of a data management system, much like an architect who sketches the plan for a building. They lay down the groundwork for an efficient and scalable data infrastructure.

Their day-to-day work is a fascinating mix of big-picture thinking and intricate detail management. They decide how data is stored, consumed, integrated, and managed by different business systems.

General Requirements

If you’re aiming to excel as a database architect but don’t necessarily want to pursue a degree, you could start honing your technical skills. Become proficient in database systems like MySQL or Oracle, and learn data modeling tools like ERwin. Don’t forget programming languages – SQL, Python, or Java.

If you want to take it one step further, pursue a credential like the Certified Data Management Professional (CDMP) or the Data Science Bootcamp by Springboard.

Average Salary

Data architecture is a very lucrative career. A database architect can earn an average of $165,383 per year.

Machine Learning Engineer

A machine learning engineer experiments with various machine learning models and algorithms, fine-tuning them for specific tasks like image recognition, natural language processing, or predictive analytics. Machine learning engineers also collaborate closely with data scientists and analysts to understand the requirements and limitations of data and translate these insights into solutions.

General Requirements

As a rule of thumb, machine learning engineers must be proficient in programming languages like Python or Java, and be familiar with machine learning frameworks like TensorFlow or PyTorch. To successfully pursue this career, you can either pursue a degree or enroll in courses and follow a self-study approach.

Average Salary

Depending heavily on the company’s size, machine learning engineers can earn between $125K and $187K per year, making this one of the highest-paying AI careers.

Quantitative Analyst

Quantitative analysts are essential for financial institutions, where they apply mathematical and statistical methods to analyze financial markets and assess risks. They are the brains behind complex models that predict market trends, evaluate investment strategies, and assist in making informed financial decisions.

They often deal with derivatives pricing, algorithmic trading, and risk management strategies, requiring a deep understanding of both finance and mathematics.

General Requirements

This data science role demands strong analytical skills, proficiency in mathematics and statistics, and a good grasp of financial theory. It always helps if you come from a finance-related background.

Average Salary

A quantitative analyst earns an average of $173,307 per year.

Data Mining Specialist

A data mining specialist uses their statistics and machine learning expertise to reveal patterns and insights that can solve problems. They sift through huge amounts of data, applying algorithms and data mining techniques to identify correlations and anomalies. Data mining specialists also help organizations predict future trends and behaviors.

General Requirements

If you want to land a career in data mining, you should possess a degree or have a solid background in computer science, statistics, or a related field.

Average Salary

Data mining specialists earn $109,023 per year.

Data Visualization Engineer

Data visualization engineers specialize in transforming data into visually appealing graphical representations, much like a data storyteller. A big part of their day involves working with data analysts and business teams to understand the data’s context.

General Requirements

Data visualization engineers need a strong foundation in data analysis and proficiency in programming languages often used in data visualization, such as JavaScript, Python, or R. Some expertise in design principles is a valuable addition, as it helps them create clear and effective visualizations.

Average Salary

The average annual pay of a data visualization engineer is $103,031.

Resources To Find Data Science Jobs

The key to finding a good data science job is knowing where to look without procrastinating. To make sure you leverage the right platforms, read on.

Job Boards

When hunting for data science jobs, both niche job boards and general ones can be treasure troves of opportunity.

Niche boards are created specifically for data science and related fields, offering listings that cut through the noise of broader job markets. Meanwhile, general job boards can have hidden gems and opportunities.

Online Communities

Spend time on platforms like Slack, Discord, GitHub, or IndieHackers, as they are a space to share knowledge, collaborate on projects, and find job openings posted by community members.

Network And LinkedIn

Don’t forget about socials like LinkedIn or Twitter. The LinkedIn Jobs section, in particular, is a useful resource, offering a wide range of opportunities and the ability to directly reach out to hiring managers or apply for positions. Just make sure not to apply through the “Easy Apply” options, as you’ll be competing with thousands of applicants who bring nothing unique to the table.

FAQs about Data Science Careers

We answer your most frequently asked questions.

Do I Need A Degree For Data Science?

A degree is not a set-in-stone requirement to become a data scientist. It’s true that many data scientists hold a bachelor’s or master’s degree, but these just provide foundational knowledge. It’s up to you to pursue further education through courses or bootcamps or work on projects that enhance your expertise. What matters most is your ability to demonstrate proficiency in data science concepts and tools.

Does Data Science Need Coding?

Yes. Coding is essential for data manipulation and analysis, especially knowledge of programming languages like Python and R.

Is Data Science A Lot Of Math?

It depends on the career you want to pursue. Data science involves quite a lot of math, particularly in areas like statistics, probability, and linear algebra.

What Skills Do You Need To Land an Entry-Level Data Science Position?

To land an entry-level job in data science, you should be proficient in several areas. As mentioned above, knowledge of programming languages is essential, and you should also have a good understanding of statistical analysis and machine learning. Soft skills are equally valuable, so make sure you’re acing problem-solving, critical thinking, and effective communication.
