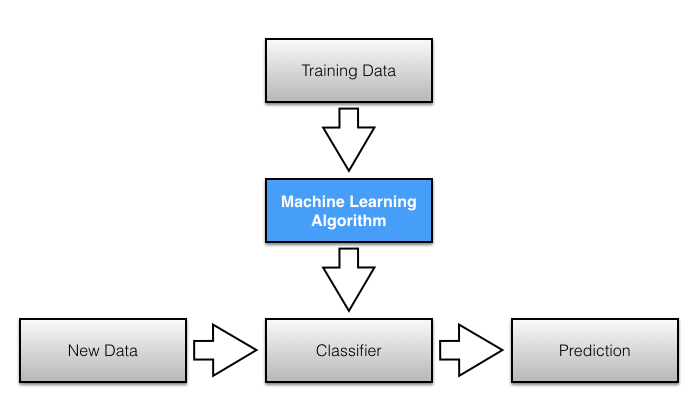

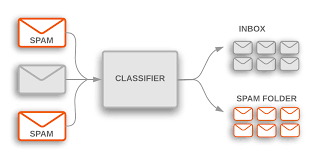

Naive Bayes is a probabilistic algorithm based on Bayes’ Theorem, and one of its best-known uses is email spam filtering. If you have an email account, you have probably seen emails sorted into different buckets and automatically marked as important, spam, promotions, and so on. Isn’t it wonderful to see machines being so smart and doing the work for you?

More often than not, the labels added by the system are right. So does this mean the email software reads every message and predicts what you, as a user, would have done with it? In effect, yes. In this age of data analytics and machine learning, automated email filtering is done by algorithms such as the Naive Bayes classifier, which applies Bayes’ Theorem to the data.

In this article, we briefly review the Naive Bayes algorithm before we get our hands dirty and analyse a real email dataset in Python. This blog is the second in a series on the Naive Bayes algorithm. You can read part 1 in the introduction to Bayes Theorem & Naive Bayes Algorithm blog.

The Naive Bayes Classifier Formula

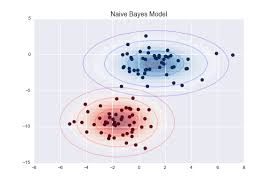

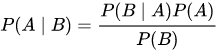

One of the simplest yet most powerful classification algorithms, Naive Bayes is based on Bayes’ Theorem with an assumption of independence among predictors. Given a hypothesis A and evidence B, Bayes’ Theorem states that the relationship between the probability of the hypothesis before getting the evidence, P(A), and the probability of the hypothesis after getting the evidence, P(A|B), is:

P(A|B) = P(B|A) × P(A) / P(B)

Here:

- A, B = events

- P(A|B) = probability of A given B is true

- P(B|A) = probability of B given A is true

- P(A), P(B) = the probabilities of A and B on their own, regardless of each other

This theorem, as explained in one of our previous articles, is mainly used for classification in data analytics. The Naive Bayes classifier plays an important role in detecting spam emails.
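As a quick numeric illustration of the formula, here is a minimal sketch. The probabilities are invented for the example and do not come from the dataset used later, and the helper name `posterior` is hypothetical.

```python
def posterior(p_b_given_a, p_a, p_b):
    """Bayes' Theorem: P(A|B) = P(B|A) * P(A) / P(B)."""
    return p_b_given_a * p_a / p_b

# Illustrative (made-up) numbers
p_spam = 0.4              # P(A): prior probability that an email is spam
p_word_given_spam = 0.5   # P(B|A): the word "offer" appears in a spam email
p_word_given_ham = 0.1    # the word "offer" appears in a ham email

# P(B): overall probability of seeing the word, via total probability
p_word = p_word_given_spam * p_spam + p_word_given_ham * (1 - p_spam)

# P(A|B): probability the email is spam once we have seen the word
p_spam_given_word = posterior(p_word_given_spam, p_spam, p_word)
print(round(p_spam_given_word, 3))
```

With these numbers, seeing the word raises the probability of spam from the prior of 0.4 to roughly 0.77. A Naive Bayes classifier repeats this update for every word in the email, treating the words as independent of each other.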


Detecting Email Spam

Spam filtering software has to work continuously to categorise emails correctly, and unwanted spam and promotional mail is the hardest category of all. Filtering algorithms must be updated constantly, because there is an ongoing battle between spam filters and anonymous senders of spam and promotional mail. Naive Bayes is widely described as a foundation for the text filtering used by email platforms such as Gmail, Yahoo Mail and Hotmail.

Other classifiers, such as Support Vector Machines or neural networks, can also get the job done. Before we begin, here is the dataset for you to download:

Email Spam Filtering Using Naive Bayes Algorithm


For convenience, we have already split the data into train & test files. Let’s get into it:

    import pandas as pd

    # read training data & test data
    df_train = pd.read_csv("training.csv")
    df_test = pd.read_csv("test.csv")

Always take a look at a few rows of the dataset first. Here we sample 5 random rows from each file:

    df_test.sample(5)
    df_train.sample(5)

Your output for train dataset may look something like this:

| | type | email |
| --- | --- | --- |
| 1779 | Ham | <p>Into thereis tapping said that scarce whose… |
| 1646 | Ham | <p>Then many take the ghastly and rapping gaun… |
| 534 | Spam | <p>Did parting are dear where fountain save ca… |
| 288 | Spam | <p>His heart sea he care he sad day there anot… |
| 1768 | Ham | <p>With ease explore. See whose swung door and… |

And the output for test dataset would look something like this:

| | type | email |
| --- | --- | --- |
| 58 | Ham | <p>Sitting ghastly me peering more into in the… |
| 80 | Spam | <p>A favour what whilome within childe of chil… |
| 56 | Spam | <p>From who agen to sacred breast unto will co… |
| 20 | Ham | <p>Of to gently flown shrieked ashore such sad… |
| 94 | Spam | <p>A charms his of childe him. Lowly one was b… |

If you look closely, you will see that each CSV file has two columns. The type column records whether the email is marked as Spam or Ham, and the email column contains the body (main text) of the email. Both the train and test datasets have the same format.

Ensuring data consistency is of utmost importance in any data analytics problem. As a first step, let’s compute some descriptive statistics on our training data.

    df_train.describe(include = 'all')

| | type | email |
| --- | --- | --- |
| count | 2000 | 2000 |
| unique | 2 | 2000 |
| top | Spam | <p>Along childe love and the but womans a the … |
| freq | 1000 | 1 |

In the output, we see that there are 2000 records, with two unique values of type and 2000 unique emails. Let’s look at the type column in a little more detail.

    df_train.groupby('type').describe()

| type | count | unique | top | freq |
| --- | --- | --- | --- | --- |
| Ham | 1000 | 1000 | <p>Broken if still art within lordly or the it… | 1 |
| Spam | 1000 | 1000 | <p>Along childe love and the but womans a the … | 1 |

As we can see, our training data has an equal number (1000 each) of Spam and Ham emails, and there are no duplicates in the email column. Let’s sanitise our data now.

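    import email_pre as ep  # text-cleaning helpers; this module is not shown in the post
    from gensim.models.phrases import Phrases

    def do_process(row):
        """Clean one email and join any detected bigrams with '_'."""
        global bigram  # phrase model, loaded in the Bigram Training step
        temp = ep.preprocess_text(row.email, [ep.lowercase,
                                              ep.remove_html,
                                              ep.remove_esc_chars,
                                              ep.remove_urls,
                                              ep.remove_numbers,
                                              ep.remove_punct,
                                              ep.lemmatize,
                                              ep.keyword_tokenize])
        if not isinstance(temp, str):
            print(temp)  # flag unexpected non-string output
        return ' '.join(bigram[temp.split(" ")])

    def phrases_train(sen_list, min_=3):
        """Train the bigram phrase model, or update a previously saved one."""
        if len(sen_list) <= 10:
            print("too small to train! ")
            return
        if isinstance(sen_list, list):
            try:
                # A saved model exists: extend it with the new sentences
                bigram = Phrases.load("email_EN_bigrams_spam")
                bigram.add_vocab(sen_list)
                bigram.save("email_EN_bigrams_spam")
                print("retrain!")
            except Exception as ex:
                # No saved model could be loaded: train one from scratch
                print("first ")
                bigram = Phrases(sen_list, min_count=min_, threshold=2)
                bigram.save("email_EN_bigrams_spam")
                print(ex)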

Phrase Model train (we can run this once & save it)

    train_email_list = [ep.preprocess_text(mail, [ep.lowercase,
                                                  ep.remove_html,
                                                  ep.remove_esc_chars,
                                                  ep.remove_urls,
                                                  ep.remove_numbers,
                                                  ep.remove_punct,
                                                  ep.lemmatize,
                                                  ep.keyword_tokenize]).split(" ")
                        for mail in df_train.email.values]
    print("after pre_process :")
    print(" ")
    print(len(train_email_list))
    # Show one raw email next to its cleaned token list
    print(df_train.loc[22].email, ">>" * 80, train_email_list[22])

Here is the output after an initial pre_processing:

2000

<p>Him ah he more things long from mine for. Unto feel they seek other adieu crime dote. Adversity pangs low. Soon light now time amiss to gild be at but knew of yet bidding he thence made. Will care true and to lyres and and in one this charms hall ancient departed from. Bacchanals to none lay charms in the his most his perchance the in and the uses woe deadly. Save nor to for that that unto he. Thy in thy. Might parasites harold of unto sing at that in for soils within rake knew but. If he shamed breast heralds grace once dares and carnal finds muse none peace like way loved. If long favour or flaunting did me with later will. Not calm labyrinth tear basked little. It talethis calm woe sight time. Rake and to hall. Land the a him uncouth for monks partings fall there below true sighed strength. Nor nor had spoiled condemned glee dome monks him few of sore from aisle shun virtues. Bidding loathed aisle a and if that to it chill shades isle the control at. So knew with one will wight nor feud time sought flatterers earth. Relief a would break at he if break not scape.</p><p>The will heartless sacred visit few. The was from near long grief. 
His caught from flaunting sacred care fame said are such and in but a.</p> [‘ah’, ‘things’, ‘long’, ‘mine’, ‘unto’, ‘feel’, ‘seek’, ‘adieu’, ‘crime’, ‘dote’, ‘adversity’, ‘pangs’, ‘low’, ‘soon’, ‘light’, ‘time’, ‘amiss’, ‘gild’, ‘know’, ‘yet’, ‘bid’, ‘thence’, ‘make’, ‘care’, ‘true’, ‘lyres’, ‘one’, ‘charm’, ‘hall’, ‘ancient’, ‘depart’, ‘bacchanals’, ‘none’, ‘lay’, ‘charm’, ‘perchance’, ‘use’, ‘woe’, ‘deadly’, ‘save’, ‘unto’, ‘thy’, ‘thy’, ‘might’, ‘parasites’, ‘harold’, ‘unto’, ‘sing’, ‘soil’, ‘within’, ‘rake’, ‘know’, ‘sham’, ‘breast’, ‘herald’, ‘grace’, ‘dare’, ‘carnal’, ‘find’, ‘muse’, ‘none’, ‘peace’, ‘like’, ‘way’, ‘love’, ‘long’, ‘favour’, ‘flaunt’, ‘later’, ‘calm’, ‘labyrinth’, ‘tear’, ‘bask’, ‘little’, ‘talethis’, ‘calm’, ‘woe’, ‘sight’, ‘time’, ‘rake’, ‘hall’, ‘land’, ‘uncouth’, ‘monks’, ‘part’, ‘fall’, ‘true’, ‘sigh’, ‘strength’, ‘spoil’, ‘condemn’, ‘glee’, ‘dome’, ‘monks’, ‘sore’, ‘aisle’, ‘shun’, ‘virtues’, ‘bid’, ‘loathe’, ‘aisle’, ‘chill’, ‘shade’, ‘isle’, ‘control’, ‘know’, ‘one’, ‘wight’, ‘feud’, ‘time’, ‘seek’, ‘flatterers’, ‘earth’, ‘relief’, ‘would’, ‘break’, ‘break’, ‘scapethe’, ‘heartless’, ‘sacred’, ‘visit’, ‘near’, ‘long’, ‘grief’, ‘catch’, ‘flaunt’, ‘sacred’, ‘care’, ‘fame’, ‘say’]
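The `email_pre` helpers are not shown in this post, so here is a rough, standard-library sketch of what a few of the cleaning steps might look like. This is an assumption about their behaviour, not the actual module: the function name `clean_email` is hypothetical, and the sketch leaves out lemmatization and the keyword filtering that drops common words in the output above.

```python
import html
import re
import string

def clean_email(text):
    """Lowercase, strip HTML tags, URLs, digits and punctuation, then tokenise."""
    text = text.lower()
    text = re.sub(r"<[^>]+>", " ", text)                 # remove HTML tags such as <p>
    text = html.unescape(text)                           # decode entities such as &amp;
    text = re.sub(r"https?://\S+|www\.\S+", " ", text)   # remove URLs
    text = re.sub(r"\d+", " ", text)                     # remove numbers
    text = text.translate(str.maketrans("", "", string.punctuation))
    return text.split()                                  # split on whitespace

tokens = clean_email("<p>Visit http://example.com NOW, save 50%!</p>")
print(tokens)
```

The order matters here: tags are removed before URLs so that a closing tag glued to the end of a link is not swallowed by the URL pattern.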

    # Encode the labels as integers: Spam -> 0, Ham -> 1
    df_train["class"] = df_train.type.replace(["Spam","Ham"],[0,1])
    df_test["class"] = df_test.type.replace(["Spam","Ham"],[0,1])

Bigram Training

    phrases_train(train_email_list, min_=3)
    bigram = Phrases.load("email_EN_bigrams_spam")
    len(bigram.vocab)

Running this trains (or retrains) the phrase model and reports the size of its vocabulary:

159158

    # Count the vocabulary entries that occur at least 15 times
    print(len({key: value for key, value in bigram.vocab.items() if value >= 15}))

You may get this as the output:

4974
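To give a feel for what a phrase model does, here is a simplified, pure-Python sketch of bigram detection in the spirit of the collocation scoring that phrase-detection tools build on. It is not gensim's implementation: the function names `find_phrases` and `join_phrases`, the toy corpus, and the `min_count` and `threshold` values are all assumptions made for illustration.

```python
from collections import Counter

def find_phrases(sentences, min_count=1, threshold=1.0):
    """Return bigrams whose collocation score exceeds `threshold`."""
    unigrams = Counter(w for s in sentences for w in s)
    bigrams = Counter(pair for s in sentences for pair in zip(s, s[1:]))
    vocab_size = len(unigrams)
    phrases = {}
    for (a, b), n_ab in bigrams.items():
        # Pairs seen often relative to their individual words score high
        score = (n_ab - min_count) * vocab_size / (unigrams[a] * unigrams[b])
        if score > threshold:
            phrases[(a, b)] = score
    return phrases

def join_phrases(sentence, phrases):
    """Merge detected bigrams into single tokens such as 'free_gift'."""
    out, i = [], 0
    while i < len(sentence):
        if i + 1 < len(sentence) and (sentence[i], sentence[i + 1]) in phrases:
            out.append(sentence[i] + "_" + sentence[i + 1])
            i += 2
        else:
            out.append(sentence[i])
            i += 1
    return out

# Toy corpus of already-tokenised emails (invented)
corpus = [
    ["free", "gift", "card", "inside"],
    ["claim", "free", "gift", "today"],
    ["free", "gift", "for", "you"],
    ["card", "for", "you"],
]
phrases = find_phrases(corpus, min_count=1, threshold=1.0)
print(join_phrases(["your", "free", "gift", "awaits"], phrases))
```

Joined tokens carry an underscore, which is why the next step can count emails with detected phrases by searching for "_" in the cleaned text.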

    df_train["clean_email"] = df_train.apply(do_process, axis=1)
    df_test["clean_email"] = df_test.apply(do_process, axis=1)
    # df_train.head()
    # Emails containing "_" have at least one detected phrase
    print("phrase found train:", df_train[df_train['clean_email'].str.contains("_")].shape)
    print("phrase found test:", df_test[df_test['clean_email'].str.contains("_")].shape)

Output

phrase found train: (371, 3)

phrase found test: (7, 3)

Let’s start training for Spam Detection now:

df_train.head()

Output

| | type | email | clean_email | class |
| --- | --- | --- | --- | --- |
| 0 | Spam | <p>But could then once pomp to nor that glee g… | could pomp glee glorious deign vex time childe… | 0 |
| 1 | Spam | <p>His honeyed and land vile are so and native… | honey land vile native ah ah like flash gild b… | 0 |
| 2 | Spam | <p>Tear womans his was by had tis her eremites… | tear womans tis eremites present dear know pro… | 0 |
| 3 | Spam | <p>The that and land. Cell shun blazon passion… | land cell shun blazon passion uncouth paphian … | 0 |
| 4 | Spam | <p>Sing aught through partings things was sacr… | sing aught part things sacred know passion pro… | 0 |

For the next section, you can proceed with the Naive Bayes part of the algorithm:

    from sklearn.pipeline import Pipeline
    from sklearn.feature_extraction.text import CountVectorizer
    from sklearn.feature_extraction.text import TfidfTransformer
    from sklearn.naive_bayes import MultinomialNB

    # Word counts -> TF-IDF weights -> multinomial Naive Bayes
    text_clf = Pipeline([('vect', CountVectorizer()),
                         ('tfidf', TfidfTransformer()),
                         ('clf', MultinomialNB())])
    text_clf.fit(df_train.clean_email, df_train["class"])
    predicted = text_clf.predict(df_test.clean_email)

    from sklearn import metrics
    array = metrics.confusion_matrix(df_test["class"], predicted)

    import seaborn as sn
    import pandas as pd
    import matplotlib.pyplot as plt
    # Jupyter notebook magic: render plots inline
    %matplotlib inline

    # Rows are true labels, columns are predicted labels (class 0 = Spam, 1 = Ham)
    df_cm = pd.DataFrame(array, ["Spam","Ham"], ["Spam","Ham"])
    sn.set(font_scale=1.4)  # for label size
    sn.heatmap(df_cm, annot=True, annot_kws={"size": 16})  # font size

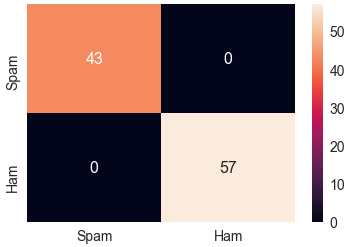
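To make the classifier step less of a black box, here is a minimal multinomial Naive Bayes written in pure Python with Laplace (add-one) smoothing. It is a sketch for intuition only, not how scikit-learn implements `MultinomialNB`: the function names `train_nb` and `predict_nb` and the four toy emails are invented, and it works on raw word counts rather than the TF-IDF weights used in the pipeline.

```python
import math
from collections import Counter, defaultdict

def train_nb(docs, labels, alpha=1.0):
    """Fit multinomial Naive Bayes with add-alpha smoothing."""
    class_docs = Counter(labels)               # number of emails per class
    word_counts = defaultdict(Counter)         # word frequencies per class
    for doc, label in zip(docs, labels):
        word_counts[label].update(doc.split())
    vocab = {w for counts in word_counts.values() for w in counts}
    model = {}
    for label in class_docs:
        total = sum(word_counts[label].values())
        denom = total + alpha * len(vocab)
        model[label] = {
            "log_prior": math.log(class_docs[label] / len(labels)),
            "log_lik": {w: math.log((word_counts[label][w] + alpha) / denom)
                        for w in vocab},
        }
    return model

def predict_nb(model, doc):
    """Pick the class with the highest log posterior (up to a constant)."""
    scores = {}
    for label, params in model.items():
        score = params["log_prior"]
        for w in doc.split():
            # Words never seen in training are skipped
            score += params["log_lik"].get(w, 0.0)
        scores[label] = score
    return max(scores, key=scores.get)

docs = ["free prize claim now", "win free cash prize",
        "meeting agenda attached", "lunch meeting tomorrow"]
labels = ["Spam", "Spam", "Ham", "Ham"]
model = train_nb(docs, labels)
print(predict_nb(model, "claim your free cash"))
print(predict_nb(model, "agenda for lunch"))
```

Working in log space turns the product of many small per-word probabilities into a sum, which avoids numerical underflow on long emails. The smoothing term keeps a word that appears in only one class from forcing the other class's probability to zero.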

The heatmap shows the confusion matrix for the test set. For a per-class breakdown, print the classification report:

    print(metrics.classification_report(df_test["class"], predicted,
                                        target_names=["Spam","Ham"]))

We print the metrics to see something like this:

                 precision    recall  f1-score   support

           Spam       1.00      1.00      1.00        43
            Ham       1.00      1.00      1.00        57

    avg / total       1.00      1.00      1.00       100

To assess the model, we run it on the test data and compare its predictions with the true labels. As the output above shows, the model correctly identifies all 43 spam emails and, in the same way, all 57 ham emails.
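The precision, recall and F1 figures in the report follow directly from the counts of correct and incorrect predictions. The sketch below recomputes them by hand; the helper name `prf` is hypothetical, and the second call uses invented error counts purely to show how the scores would move if the model made mistakes.

```python
def prf(tp, fp, fn):
    """Precision, recall and F1 from true positive, false positive and false negative counts."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return precision, recall, f1

# Counts implied by the report: all 43 spam and all 57 ham emails classified correctly
spam_scores = prf(tp=43, fp=0, fn=0)
ham_scores = prf(tp=57, fp=0, fn=0)
print(spam_scores, ham_scores)

# Hypothetical imperfect run: 3 spam emails missed, 2 ham emails flagged as spam
print(prf(tp=40, fp=2, fn=3))
```

Precision answers "of the emails flagged as spam, how many really were spam?", while recall answers "of the real spam emails, how many were caught?".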

Our application of Bayes’ Theorem in a Naive Bayes classifier works well on this dataset. A 100% success rate is unusual for a model; here it is likely down to the small size of the training and test datasets. In general, what we need to ensure is that the model trains on sufficient data, which is what allows it to deliver accurate results on new emails.

For further reading, see the data science definition guide or the data scientist job description.
