Whether you want to build an automated image captioning tool or a machine translation tool, the first question to ask is: what are recurrent neural networks? Recurrent neural networks (RNNs) add a twist to simple neural networks by remembering past data. While simple neural networks map a fixed-size input to a fixed-size output, RNNs retain information about previous inputs, which lets them work with sequential data.

Understanding Recurrent Neural Networks

A recurrent neural network, or RNN, processes sequences such as sentences, daily stock prices, or sensor measurements. It is a class of neural network that handles a sequence one element at a time while retaining a memory (or state) of the earlier elements.

Recurrent means that the output (the hidden state) computed at the current time step becomes an input for the next time step. So at every element, the model considers not only the current input but also a summary of the elements that came before it.
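The recurrence can be sketched in a few lines of plain Python. This is a minimal illustration, assuming a single scalar hidden state and hand-picked weights; `W_INPUT`, `W_HIDDEN`, and `run_rnn` are made-up names for this example, and a real network would learn its weights from data.

```python
import math

# Hypothetical weights chosen only for illustration; a real network learns them.
W_INPUT = 0.8   # weight on the current input
W_HIDDEN = 0.5  # weight on the previous hidden state


def run_rnn(sequence):
    """Return the hidden state after each element of `sequence`."""
    hidden = 0.0  # empty memory before the first element
    states = []
    for x in sequence:
        # The new state depends on the current input AND the previous state.
        hidden = math.tanh(W_INPUT * x + W_HIDDEN * hidden)
        states.append(hidden)
    return states


# Same values, different order: the final states differ because the
# hidden state carries a summary of what came earlier in the sequence.
print(run_rnn([1.0, 0.0, 0.0])[-1])
print(run_rnn([0.0, 0.0, 1.0])[-1])
```

A network without the `W_HIDDEN * hidden` term would produce the same value for a given input no matter what preceded it.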

Recurrent neural networks are loosely inspired by the way people process sequences. For instance, we consider the entire sentence before speaking or writing, rather than just the individual words we want to use. Similarly, the memory of an RNN lets it learn dependencies across a sequence, so it can take the preceding context into account before making the next prediction.

For building RNNs, you can use Keras, a neural network library written in Python. Although many other neural network libraries support RNNs, Keras is often preferred for its fast development time and ease of use. With Keras, you can also build text generation and predictive models using RNNs.

The advantages of the RNN structure include:

- Processing input of any length

- The model size does not increase with the size of the input

- The computation at each step takes earlier inputs into account

- The same weights are shared across all time steps
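To see why the model size does not depend on the input length, it can help to count the parameters of a basic RNN. The sketch below assumes one recurrent layer with bias terms followed by a linear output layer; `simple_rnn_param_count` is a hypothetical helper written for this example, not a library function.

```python
def simple_rnn_param_count(input_dim, hidden_dim, output_dim):
    """Count the weights and biases of a basic RNN with one recurrent layer."""
    input_to_hidden = input_dim * hidden_dim    # applied to every input element
    hidden_to_hidden = hidden_dim * hidden_dim  # the recurrent connection
    hidden_bias = hidden_dim
    hidden_to_output = hidden_dim * output_dim
    output_bias = output_dim
    return (input_to_hidden + hidden_to_hidden + hidden_bias
            + hidden_to_output + output_bias)


# Sequence length is not an argument: the same parameters are reused
# at every time step, whether the sequence has 5 elements or 5,000.
params = simple_rnn_param_count(input_dim=10, hidden_dim=32, output_dim=1)
print(params)  # 1409
```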

There are also some disadvantages to using recurrent neural networks, including:

- Computation is slow compared to simple neural networks, because the time steps have to be processed one after another

- It can be difficult to access information from many time steps back

- A basic RNN cannot consider any future input when computing the current state
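The difficulty of reaching information from far back in the sequence is usually called the vanishing gradient problem. A rough numeric sketch, assuming a scalar RNN with a `tanh` activation, a constant input of 1.0, and hand-picked weights (`influence_of_first_state` and its parameters are hypothetical names for this example), shows how quickly the effect of the starting state fades:

```python
import math


def influence_of_first_state(num_steps, w_hidden=0.9, w_input=0.5):
    """Compute d(h_T)/d(h_0) for a scalar RNN fed a constant input of 1.0."""
    hidden = 0.0
    influence = 1.0
    for _ in range(num_steps):
        hidden = math.tanh(w_input * 1.0 + w_hidden * hidden)
        # Chain rule: each step multiplies by w_hidden * tanh'(z),
        # where tanh'(z) = 1 - tanh(z) ** 2.
        influence *= w_hidden * (1.0 - hidden ** 2)
    return influence


# The influence of the starting state shrinks as the sequence gets longer.
for steps in (1, 5, 20, 50):
    print(steps, influence_of_first_state(steps))
```

With these weights every factor in the product is smaller than 1, so the influence decays with each extra step. Gated variants such as LSTM and GRU were designed to ease this problem.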

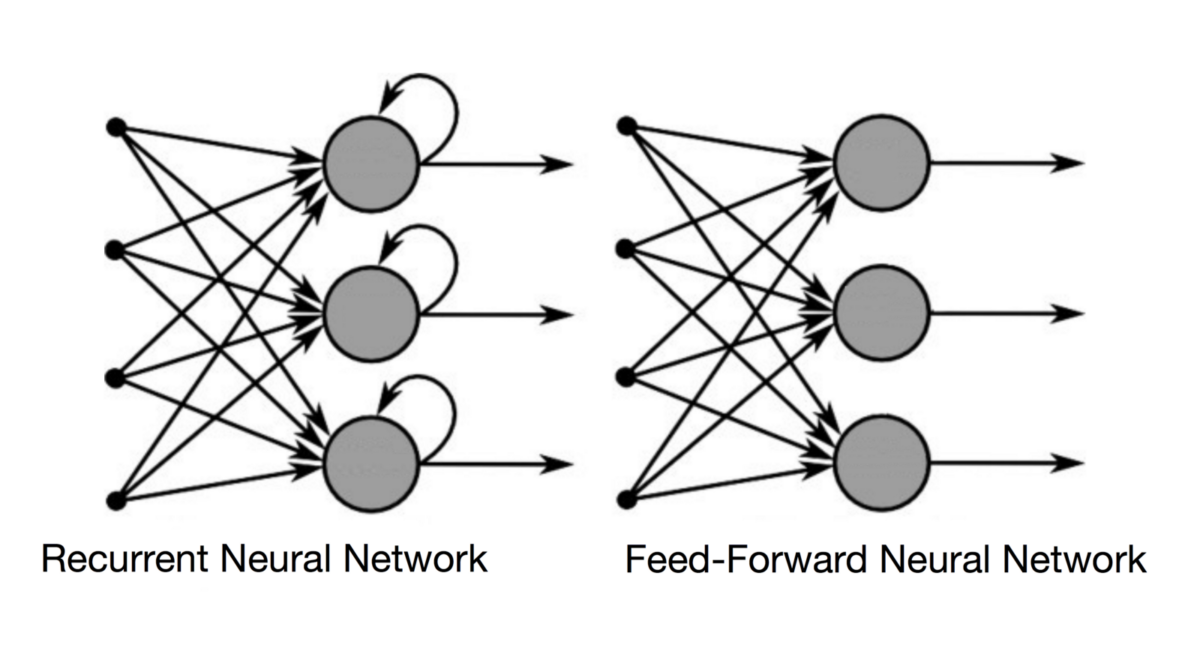

How RNNs Differ from Simple Neural Networks

A simple feedforward neural network is good at learning patterns between a given input and its output. For instance, it can identify whether an image has a dog in it or not. But if you input an image of a cat after an image of a dog, the network has no memory or context of the previous dog image; it classifies the cat image independently. As a result, simple neural networks are not suitable for sequences where earlier context is needed to make predictions, such as predicting stock prices from past data. You can't predict a stock price from a single value in isolation. You have to take previous stock values and market patterns into account to make an informed prediction.

The Architecture of a Basic Recurrent Neural Network

There are four main elements in an RNN architecture: input, hidden state, weights, and output.

Input: The element of the sequence that is fed to the network at the current time step.

Hidden state: This acts as the memory of the network. It is calculated from the previous hidden state and the current input.

Weights: The input-to-hidden connections are parameterised by a weight matrix, as are the hidden-to-hidden recurrent connections and the hidden-to-output connections.

Output: The prediction the network produces, computed from the hidden state.
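The four elements can be put together in a short forward pass. This is a sketch under stated assumptions rather than a reference implementation: it uses plain Python lists instead of a numerical library, a `tanh` activation, no bias terms, and made-up weight values. The names `matvec`, `rnn_forward`, `W_xh`, `W_hh`, and `W_hy` are illustrative.

```python
import math


def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector (list of floats)."""
    return [sum(w * v for w, v in zip(row, vector)) for row in matrix]


def rnn_forward(inputs, W_xh, W_hh, W_hy, h0):
    """Run a basic RNN over `inputs`; return all outputs and the last hidden state."""
    h = h0
    outputs = []
    for x in inputs:
        # Hidden state: combine the current input with the previous hidden state.
        pre_activation = [a + b for a, b in zip(matvec(W_xh, x), matvec(W_hh, h))]
        h = [math.tanh(z) for z in pre_activation]
        # Output: read the prediction off the current hidden state.
        outputs.append(matvec(W_hy, h))
    return outputs, h


# Hypothetical sizes: 2 input features, 3 hidden units, 1 output value.
W_xh = [[0.5, -0.2], [0.1, 0.4], [-0.3, 0.2]]                 # input to hidden, 3 x 2
W_hh = [[0.1, 0.0, 0.2], [0.0, 0.1, -0.1], [0.2, -0.2, 0.1]]  # hidden to hidden, 3 x 3
W_hy = [[1.0, -1.0, 0.5]]                                     # hidden to output, 1 x 3
h0 = [0.0, 0.0, 0.0]                                          # empty memory

sequence = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, final_hidden = rnn_forward(sequence, W_xh, W_hh, W_hy, h0)
print(outputs)
print(final_hidden)
```

The same three matrices are applied at every time step; only the hidden state `h` changes as the sequence is consumed.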

Different Types of Recurrent Neural Networks

1. One to many

These networks take a fixed-size input and produce a sequence as output. For example, a captioning algorithm takes an image as input and produces a caption, which is a sequence of words.

2. Many to one

These networks take a sequence as input and produce a fixed-size output. For example, a sentiment analysis algorithm takes a sentence as input and classifies it as having positive or negative sentiment.

3. Many to many

These networks take a sequence as input and produce another sequence as output. For example, in machine translation a recurrent neural network takes a sentence in one language and translates it into a different language.
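The three patterns differ mainly in when inputs are consumed and when outputs are read, which can be sketched around one shared update step. The example below is a simplification with scalar states and hand-picked weights; all names are hypothetical. It also assumes the aligned form of many to many (one output per input), whereas translation systems typically use an encoder-decoder arrangement in which input and output lengths can differ.

```python
import math

# Hypothetical scalar weights, for illustration only.
W_IN, W_REC, W_OUT = 0.7, 0.5, 1.2


def step(x, hidden):
    """One recurrent update: return the new hidden state."""
    return math.tanh(W_IN * x + W_REC * hidden)


def readout(hidden):
    """Map a hidden state to an output value."""
    return W_OUT * hidden


def one_to_many(x, num_outputs):
    """Single input in, sequence out (e.g. image features to caption words)."""
    hidden, outputs = 0.0, []
    current_input = x
    for _ in range(num_outputs):
        hidden = step(current_input, hidden)
        outputs.append(readout(hidden))
        current_input = outputs[-1]  # feed the latest output back in
    return outputs


def many_to_one(sequence):
    """Sequence in, single output out (e.g. sentence to sentiment score)."""
    hidden = 0.0
    for x in sequence:
        hidden = step(x, hidden)
    return readout(hidden)  # only the final state is read


def many_to_many(sequence):
    """Sequence in, one output per time step out."""
    hidden, outputs = 0.0, []
    for x in sequence:
        hidden = step(x, hidden)
        outputs.append(readout(hidden))
    return outputs


print(one_to_many(1.0, 4))
print(many_to_one([1.0, 2.0, 3.0]))
print(many_to_many([1.0, 2.0, 3.0]))
```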

Now that you know what a recurrent neural network is, you will recognise that an RNN may be working behind the scenes the next time you use a speech-to-text application or a machine translation tool.
